Background

With the advent of language models, text generation is becoming viable enough to be used in writing interfaces to provide synchronous next-phrase suggestions.

While the NLP technologies that enable such interfaces are progressing rapidly, research on writers' interactions with these technologies, and on their effects, is still emerging.

Therefore, we decided to shed light on writers' cognitive processes while interacting with suggestions.

Research Questions

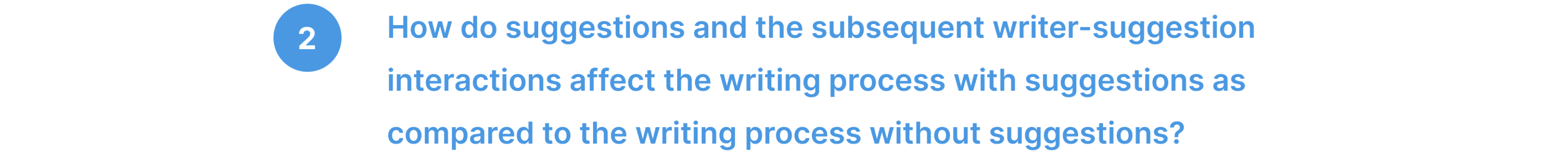

While extensive research has been conducted on this topic from a behavioral perspective, there is limited exploration from a cognitive viewpoint, leading us to our Research Question 1 (RQ1).

We also sought to understand how the presence and content of suggestions impact the writing process, which inspired our Research Question 2 (RQ2).

Language models often inherit biases and dominant views from their training text corpus. This issue is particularly relevant as generative AI becomes integral to our lives. Understanding how these biases affect human interaction with technology, especially in the writing process, guided us to our Research Question 3 (RQ3).

Research Methodology

For this research, it was crucial to observe, collect, and analyze participants' interactions while writing. Consequently, we brainstormed extensively on what to ask our participants to write, and how.

Why movie reviews?

The following reasons led us to choose movie reviews as the core task for users in this research:

Writing movie reviews involves expressing one's opinion and arguing for it.

Movie reviews have a star rating, which gives us a linear scale of sentiment that can be used to create a variety of writer-suggestion misalignments.

Countless movie reviews exist on the internet, along with available datasets, giving us a rich resource to train a language model.

How should we ask them to write?

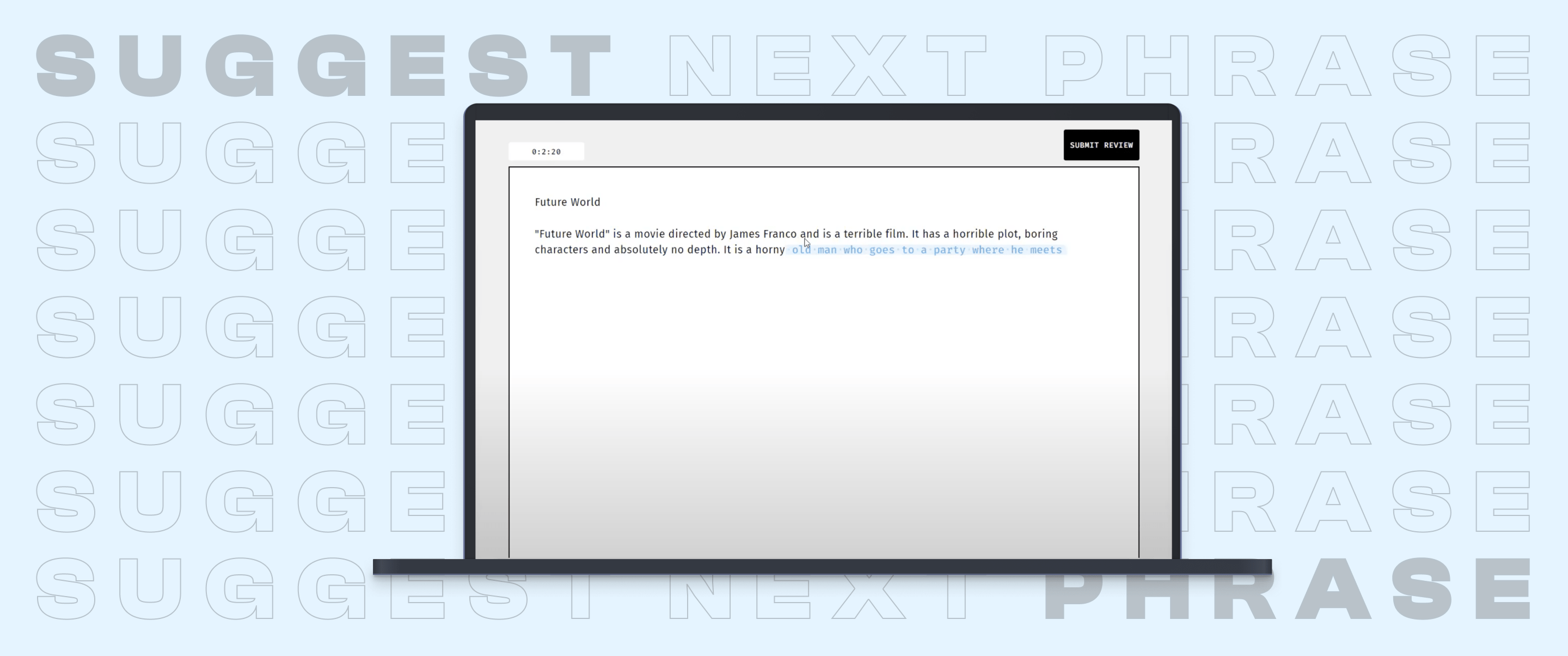

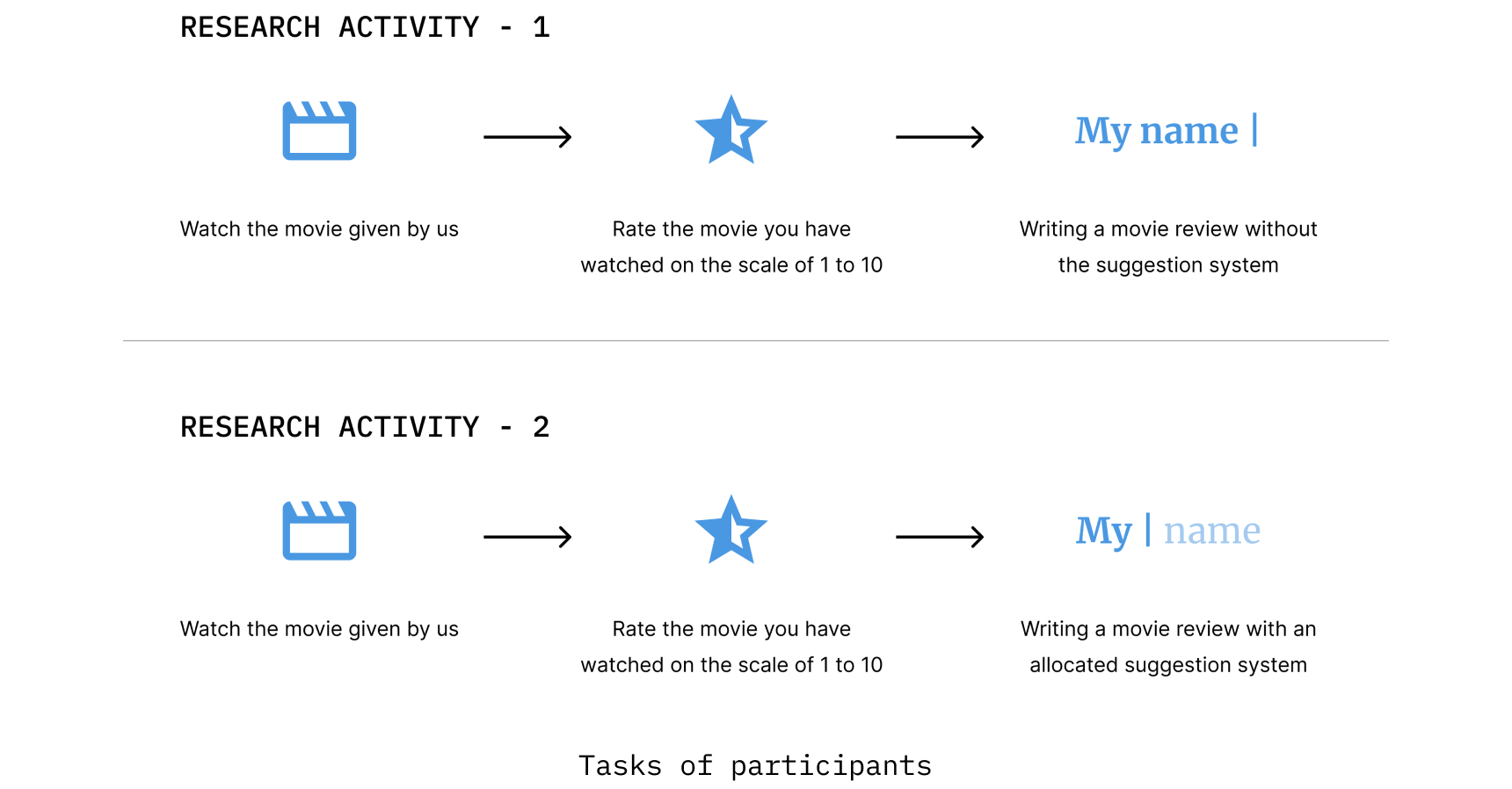

We asked participants to complete two tasks: First, they watched a movie we selected and wrote a review without any suggestions. In the second task, they watched a different movie, this time writing their review with suggestions provided.

We conducted our research with 14 L2 participants, individuals for whom English is a second language rather than their native tongue.

How should we collect data?

We asked participants to write their movie reviews in our presence. Due to COVID-19, the setup included two researchers and one participant, who shared their screen while composing the review. We implemented a concurrent think-aloud protocol, encouraging participants to verbalize their thoughts during the writing process. Following this, we conducted a retrospective think-aloud protocol, where we recorded their writing session and later played it back for them, asking follow-up questions. The entire session was recorded, and we created memos during the process.

Data Analysis

We conducted thematic analysis collaboratively using the Atlas.ti tool.

Findings

The following are some of our findings, along with what participants were suggested and what they finally wrote, to support each finding:

Contribution of suggestions to the processes of composition

Abstracted themes from suggestions to inspire new sentences

Adapted sentence structures from suggestions in their own writing

Copied suggestions that matched their working memory

Evaluating Suggestions

Independent evaluation of suggestions

Evaluation with respect to text written so far

Evaluation with respect to the Working Memory State (WMS)

Evaluation influenced by the beliefs about the suggestion system

Effects on the writing process

Alignment between suggested text and the writer's intent influences their writing process

Relevant suggested text shapes their next writing steps

Suggestions can distract users based on their current writing stage

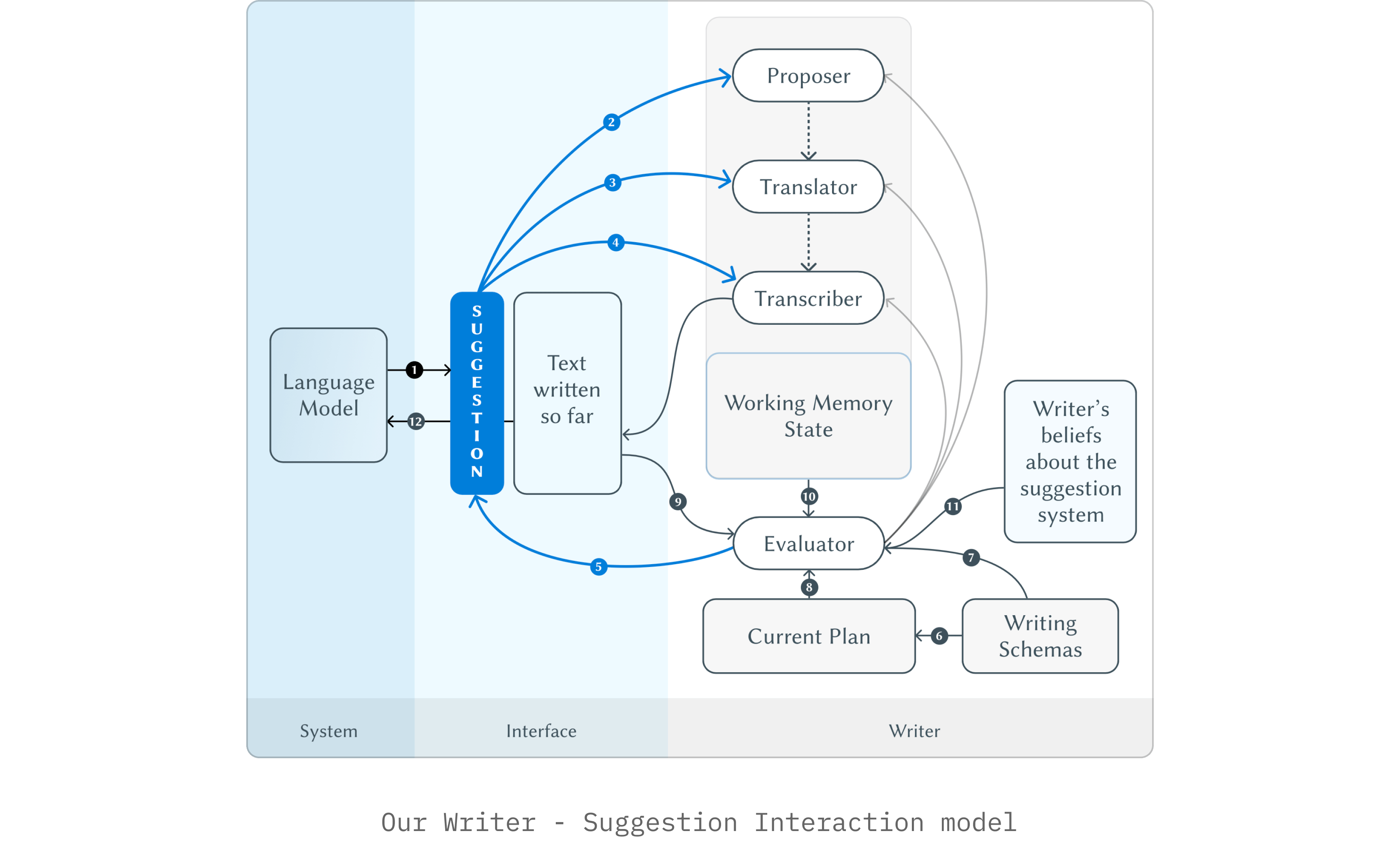

Model

Based on our findings, we propose a model that builds upon the categories and concepts proposed by Hayes and articulates the findings of our study. The accompanying image shows the model we proposed.

Design Opportunities

We believe future systems can draw on the research findings above and on the following design implications to build effective human-AI suggestion systems:

Strategic Sampling

Strategically controlling sampling in the language model to suggest phrases that will aid writers with more ideas (what to say) and language (how to say it).

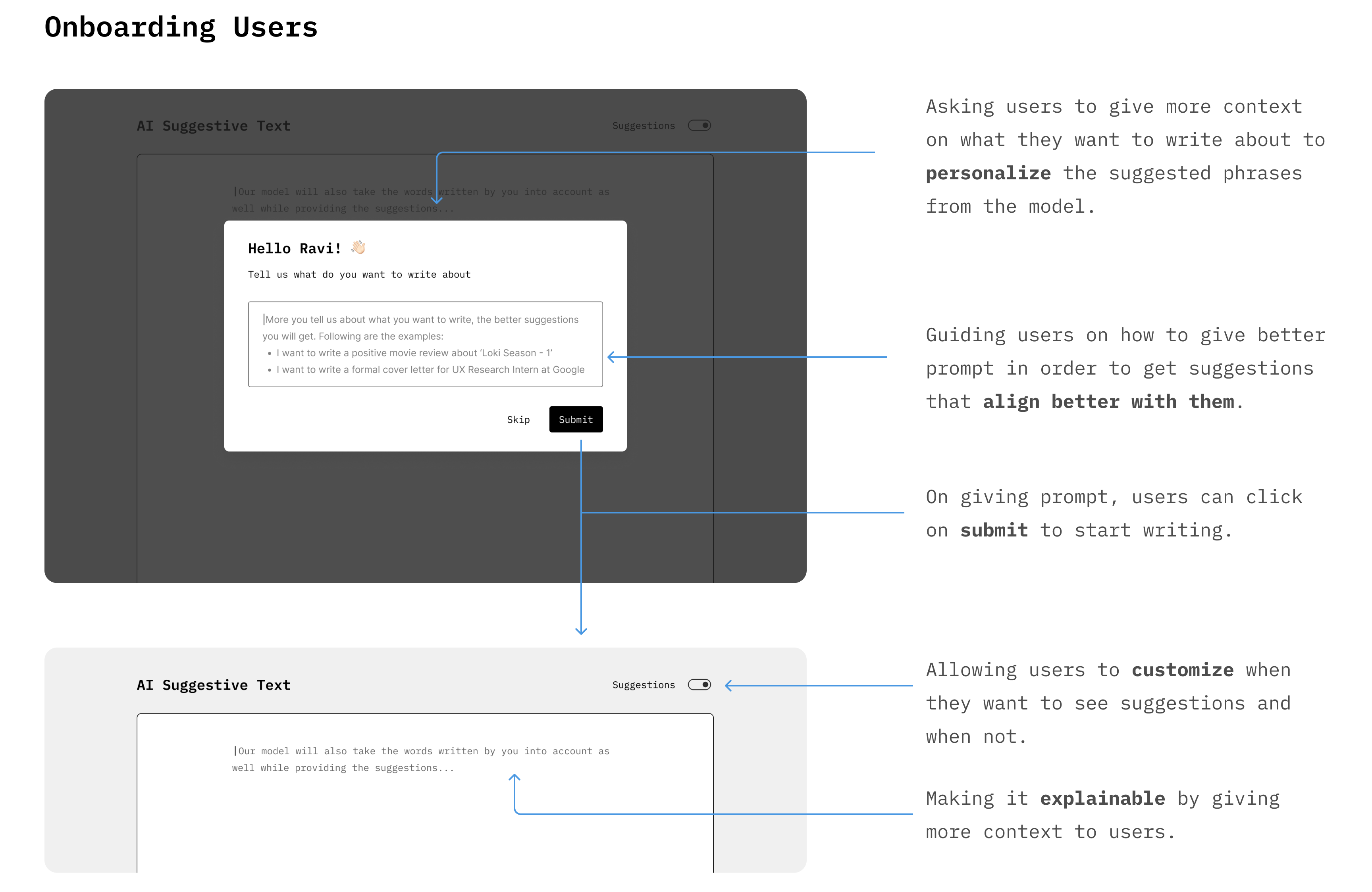
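To make the idea of controlled sampling more concrete, here is a minimal sketch using temperature and top-k sampling, two common ways to steer how predictable or varied generated text is. It is not the model used in our study: the function `sample_next_phrase`, the candidate phrases, and their scores are all hypothetical, and the mapping of low temperature to "language" help and high temperature to "idea" help is our assumption for illustration.

```python
import math
import random


def sample_next_phrase(scores, temperature=1.0, top_k=None, rng=None):
    """Sample one candidate phrase from hypothetical model scores (logits)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    rng = rng or random.Random()

    # Rank candidates by score and optionally keep only the top_k best.
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]

    # Temperature-scaled softmax weights (subtract the max for stability).
    scaled = [score / temperature for _, score in ranked]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]

    phrases = [phrase for phrase, _ in ranked]
    return rng.choices(phrases, weights=weights, k=1)[0]


# Hypothetical candidate continuations with made-up scores.
candidates = {
    "was a great movie": 3.0,
    "kept me on the edge of my seat": 1.5,
    "reminded me of my childhood": 0.5,
}

# Assumed "language" mode: low temperature and a small top_k favor the
# most likely, most predictable phrasing (how to say it).
language_help = sample_next_phrase(candidates, temperature=0.3, top_k=1)

# Assumed "idea" mode: higher temperature spreads probability across
# less likely candidates, surfacing more varied content (what to say).
idea_help = sample_next_phrase(candidates, temperature=1.5, rng=random.Random(0))
```

In a real system the scores would come from the language model itself, and the interface could switch between such modes depending on whether the writer is looking for ideas or for wording.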

Personalizing Models

Personalizing the suggestion models to avoid generic suggestions and reduce evaluation time.

More customization

Giving users the freedom to choose which cognitive process (proposer, translator, or transcriber) they want suggestions for, and when.

Explainable AI

Making the AI suggestion model more explainable so that users can collaborate with it more effectively and get more relevant suggestions.

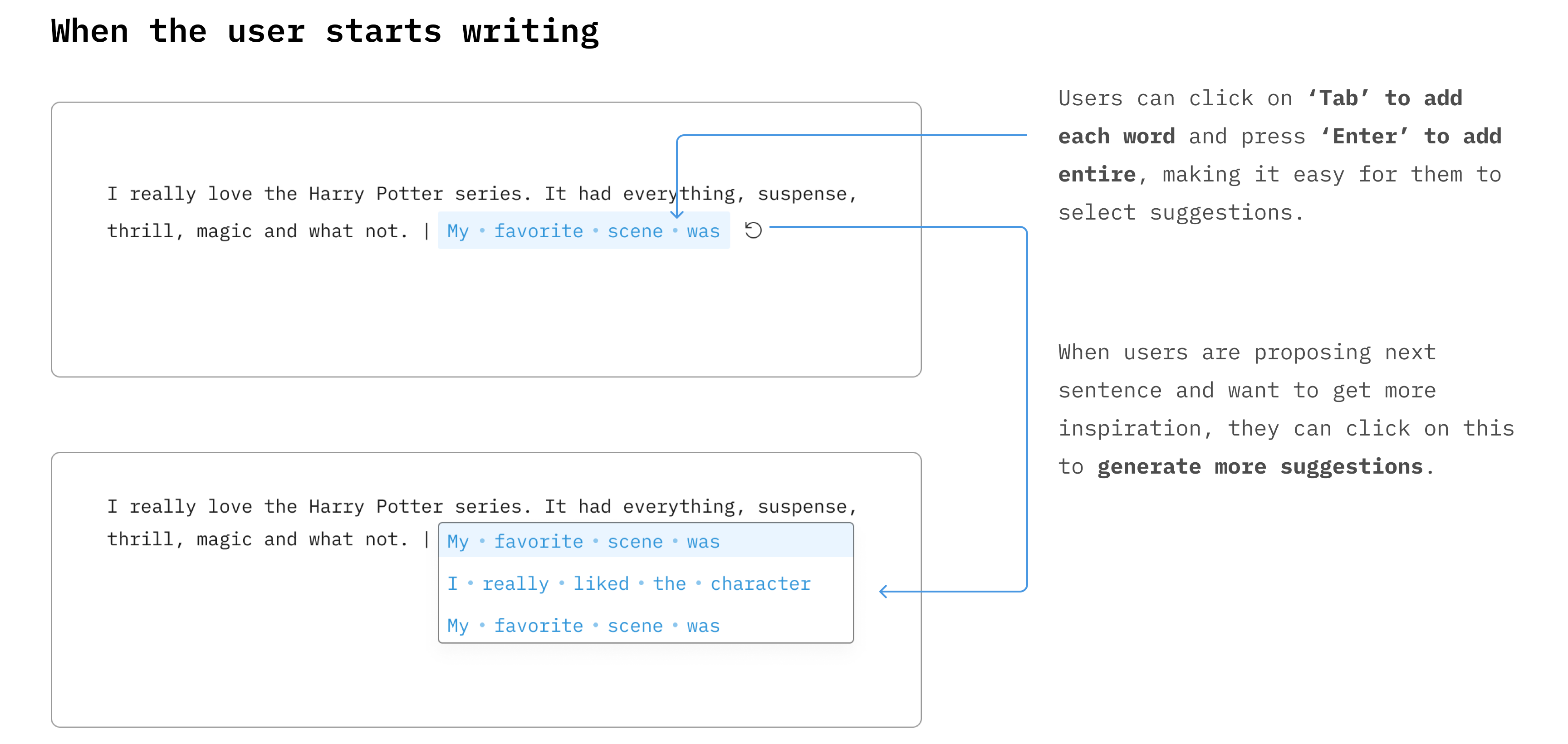

Seamless selection

Making it seamless to extract individual phrases from the suggested text.

Final Designs

Building on the identified design opportunities, I created the following screens to illustrate a human-centered approach for AI-powered next-phrase suggestion systems: